Character AI platforms, such as Character.AI, provide personalized, interactive experiences through simulated human-like conversations. They are widely used for companionship, learning, and entertainment. A key feature of these platforms is censorship, that is, automated content moderation intended to keep interactions safe, respectful, and appropriate for all users.

Character AI Platform and Its Uses

Character AI platforms are becoming increasingly popular due to their versatility and the advanced AI technology they employ. Here are some common uses:

- Companionship: Many users turn to AI companions for emotional support and friendship. These AI entities can provide a listening ear and engage in meaningful conversations, making them valuable for those seeking companionship.

- Learning and Tutoring: AI characters can assist with educational content and provide tutoring in various subjects. They can answer questions, explain complex topics, and offer personalized learning experiences.

- Entertainment: From role-playing games to creative storytelling, AI characters enhance entertainment experiences by engaging users in interactive and immersive narratives.

- Customer Service: Businesses use AI characters to provide efficient customer service and support. These AI entities can handle routine inquiries, provide information, and assist with problem resolution.

Explanation of Censorship Features and Their Purpose

Censorship on Character AI platforms is designed to protect users and ensure a positive interaction environment. The main purposes of censorship include:

- Safety: To protect users from harmful content, including explicit language, hate speech, and cyberbullying.

- Compliance: To ensure that interactions comply with legal regulations and platform policies.

- User Experience: To maintain a respectful and positive environment for all users, promoting healthy and constructive interactions.

For example, Character.AI employs AI algorithms to detect and filter out inappropriate content automatically. This includes filtering explicit language, hate speech, and any content that could be deemed harmful or offensive.

Importance of Understanding Platform Policies and Terms of Service

It is crucial for users to understand the policies and terms of service of their chosen Character AI platform. These policies outline the acceptable use of the platform and the extent of censorship applied. Ignoring these guidelines can lead to negative consequences, such as account suspension or termination.

- Transparency: Most platforms provide detailed information about their content moderation practices and the rationale behind them. For instance, Character.AI has a comprehensive Safety Center that outlines their content moderation policies and practices.

- User Control: Some platforms offer limited user control over censorship settings, allowing adjustments within specified parameters. However, core safety measures typically remain in place to protect all users.

- Responsibility: Users should be aware of their responsibility to adhere to platform guidelines and contribute to a positive community environment. Engaging with AI characters responsibly helps maintain the integrity and safety of the platform.

By understanding these aspects, users can better navigate their interactions with Character AI platforms and make informed decisions about their usage.

Understanding Censorship in Character AI

Censorship on Character AI platforms is designed to maintain a safe, respectful, and constructive environment for users. This section covers what censorship entails, its purposes, the types of content typically censored, and the policies governing these measures.

Definition and Purpose

Censorship in Character AI refers to the moderation of content to prevent the dissemination of harmful, explicit, or inappropriate material. The primary purposes of censorship are to:

- Protect Users: Ensure that interactions do not expose users to offensive, harmful, or dangerous content.

- Maintain Compliance: Adhere to legal regulations and platform-specific guidelines.

- Enhance User Experience: Foster a positive, respectful, and enjoyable interaction space.

For instance, Character.AI's censorship mechanisms are designed to automatically filter out content that violates their community standards, ensuring that AI-generated responses remain within acceptable boundaries.

Types of Censorship

Character AI platforms typically censor several types of content to uphold their standards. These include:

- Explicit Language: Blocking profanity and vulgar language to keep conversations clean and appropriate for all users.

- Sensitive Topics: Restricting discussions related to violence, abuse, and other sensitive subjects to protect users' mental and emotional well-being.

- Hate Speech: Preventing any form of discriminatory or hateful language directed towards individuals or groups based on race, gender, religion, sexual orientation, etc.

- Personal Information: Protecting user privacy by blocking the sharing of personal details such as addresses, phone numbers, and other identifying information.

According to Character.AI’s Safety Center, these measures are in place to prevent harmful or offensive interactions and to promote a safe environment for all users.
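To make the categories above concrete, here is a minimal, rule-based sketch in Python of how a message might be checked for blocklisted terms and have personal information redacted. It is an illustration only and does not describe how Character.AI or any other platform actually implements moderation; production systems typically rely on trained classifiers rather than simple patterns. The names `BLOCKLIST`, `ModerationResult`, and `moderate` are hypothetical, and the blocklist entries are placeholders.

```python
import re
from dataclasses import dataclass, field

# Hypothetical placeholder terms; a real blocklist would be larger and curated.
BLOCKLIST = {"badword", "slur_example"}

# Simple patterns for email addresses and phone-like digit runs (assumed formats).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{5,}\d")


@dataclass
class ModerationResult:
    text: str                                    # message after redaction
    blocked: bool = False                        # True if a blocklisted term was found
    reasons: list = field(default_factory=list)  # labels explaining what was triggered


def moderate(message: str) -> ModerationResult:
    """Flag blocklisted terms and redact emails and phone numbers."""
    reasons = []

    # Compare lowercase word tokens against the blocklist.
    words = set(re.findall(r"[a-z_]+", message.lower()))
    if words & BLOCKLIST:
        reasons.append("blocklisted_term")

    # Replace personal details with neutral markers.
    redacted = EMAIL_RE.sub("[email removed]", message)
    redacted = PHONE_RE.sub("[phone removed]", redacted)
    if redacted != message:
        reasons.append("personal_info_redacted")

    return ModerationResult(
        text=redacted,
        blocked="blocklisted_term" in reasons,
        reasons=reasons,
    )
```

Calling `moderate("Call me at 555-123-4567")` returns a result whose `text` has the number replaced by a marker, while a message containing a blocklisted term is returned with `blocked` set to `True`. Pattern matching of this kind is easy to evade and prone to false positives, which is one reason filters can feel overly strict or inconsistent to users.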

Platform Policies

Each Character AI platform has specific policies regarding censorship, aimed at maintaining a safe and respectful user experience. Here are some examples:

- Character.AI:

◦ Content Moderation: Character.AI employs automated tools to block violating content before it can be posted. This includes filtering explicit language, hate speech, and other prohibited content as outlined in their Community Standards.

◦ User Reporting: Users can report content that they believe violates the platform’s terms of service. The Trust & Safety team reviews flagged content and can take actions such as warning users, deleting content, or suspending accounts.

- OpenAI's ChatGPT:

◦ Ethical Guidelines: OpenAI integrates content moderation directly into its AI models to prevent the generation of harmful or biased content. They continuously update their models to improve safety and user experience, as noted in their official documentation.

- Replika:

◦ Moderation Policies: Replika uses AI algorithms to detect and censor inappropriate content automatically. They also allow users to enable or disable mature content filters, providing some level of customization while ensuring core safety measures are maintained.

Impact of Censorship on User Experience

While censorship is crucial for safety and compliance, it can impact user experience in various ways:

- Positive Impact: By filtering harmful content, censorship helps create a safe and enjoyable interaction space, especially important for younger users or those seeking supportive conversations.

- Negative Impact: Overly strict censorship may limit creative expression or restrict discussions on certain topics, leading some users to feel constrained.

Balancing these impacts is an ongoing challenge for Character AI platforms. Continuous user feedback plays a critical role in refining and improving censorship policies to better align with user preferences while maintaining safety standards.

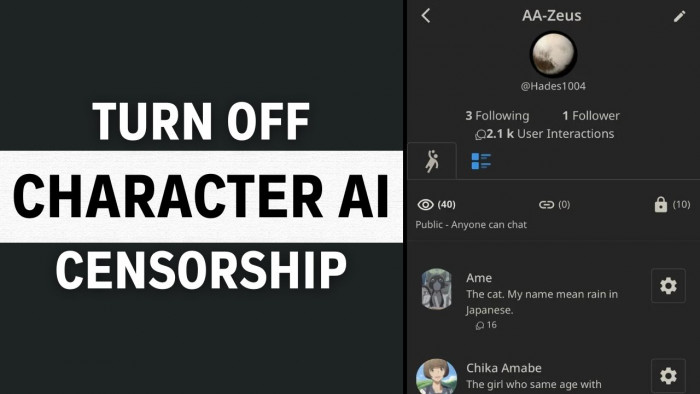

Checking Platform Settings

Exploring and understanding the settings on your Character AI platform can help you navigate censorship features and customize your interaction experience. Here’s how you can check and adjust these settings effectively.

User Settings

Accessing and adjusting user settings on Character AI platforms typically involves a few simple steps:

- Accessing Settings:

◦ Character.AI: To access the settings on Character.AI, navigate to your profile by clicking on your avatar or the settings icon, usually found in the top right corner of the screen. This will open the settings menu where you can adjust various preferences.

- Navigating Settings:

◦ Profile Customization: In the settings menu, you can adjust your profile details, such as your display name, avatar, and personal preferences.

◦ Content Filters: Look for sections related to content moderation or safety settings. These options may include toggles for “Safe Mode,” “Mature Content,” or similar filters.

Content Filters

Character AI platforms provide some level of control over content filters to help tailor user experiences. Here’s how to identify and modify these filters:

1. Adjustable Filters:

◦ Character.AI: Within the settings menu, you may find options to enable or disable mature content filters, depending on what the platform currently offers. Where available, these let you customize the level of censorship applied to your interactions.

◦ Replika: Similar to Character.AI, Replika provides settings to toggle mature content filters, enabling users to adjust the sensitivity of their AI interactions.

2. Customizing Interactions:

◦ Keywords and Phrases: Some platforms allow users to set specific keywords or phrases that the AI should avoid or prioritize, helping to tailor the conversation flow to your preferences.

◦ Topic Preferences: Users can indicate preferred topics for conversation, which the AI will prioritize, ensuring that discussions remain relevant and engaging.

Limitations

Despite the available settings, there are inherent limitations to user-controlled censorship adjustments on Character AI platforms:

1. Non-Adjustable Filters:

◦ Character.AI: While some content filters can be adjusted, others, such as those blocking explicit language or hate speech, are non-adjustable to ensure user safety and compliance with platform policies. The Community Standards emphasize maintaining a respectful environment, necessitating these strict filters.

2. Algorithmic Constraints:

◦ Pre-Programmed Algorithms: The AI models are programmed with specific censorship parameters that are not user-adjustable. These include restrictions on discussing illegal activities, violence, and other sensitive subjects.

◦ AI Learning: Platforms may refine their moderation rules over time based on user interactions and feedback, so moderation behavior can change without direct user input.

3. Privacy and Safety:

◦ User Safety: Platforms prioritize user safety, which means that certain protections, such as preventing the sharing of personal information, cannot be disabled. This is crucial for protecting user privacy and preventing harmful interactions.

◦ Legal Compliance: Adhering to regional laws and regulations is essential, and platforms must ensure their censorship policies comply with these standards globally. This often results in non-negotiable content restrictions.
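The split between adjustable and non-adjustable filters can be pictured as a settings object in which some switches are locked. The Python sketch below is hypothetical: the filter names, `LOCKED_FILTERS`, and `FilterSettings` are invented for illustration and do not correspond to any platform's real settings or API.

```python
from dataclasses import dataclass, field

# Hypothetical core filters that a user may never switch off.
LOCKED_FILTERS = frozenset({"hate_speech", "personal_info", "illegal_activity"})


@dataclass
class FilterSettings:
    # True means the filter is active. All filters start enabled.
    filters: dict = field(default_factory=lambda: {
        "hate_speech": True,
        "personal_info": True,
        "illegal_activity": True,
        "mature_content": True,
    })

    def set_filter(self, name: str, enabled: bool) -> bool:
        """Apply a user change; return False if the change was rejected."""
        if name not in self.filters:
            raise KeyError(f"unknown filter: {name}")
        if name in LOCKED_FILTERS and not enabled:
            return False  # core safety filters cannot be disabled
        self.filters[name] = enabled
        return True
```

In this sketch, `set_filter("mature_content", False)` succeeds, while `set_filter("hate_speech", False)` is rejected and leaves the filter active. That mirrors the behavior described above, where a user-facing toggle exists for some filters but core protections stay in place regardless of user preference.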

Official Stance on Disabling Censorship

Users who want to turn off or adjust censorship features should first understand each platform's official policy. This section looks at the stance of major Character AI platforms, including Character.AI, on disabling censorship, the feasibility of doing so, and the potential risks involved.

Platform Guidelines

Character.AI: Character.AI is committed to providing a safe and respectful interaction environment, which is why it implements strict content moderation policies. According to the Character.AI Safety Center, the platform uses both AI algorithms and human moderators to filter out inappropriate content.

◦ Content Moderation: Automated tools are employed to block violating content before it can be posted. This includes explicit language, hate speech, and any content that promotes violence or illegal activities.

◦ User Reporting: Users have access to tools that allow them to report inappropriate content. The Trust & Safety team reviews these reports and takes appropriate actions, such as warning users, deleting content, or suspending accounts.

Replika: Replika also focuses on creating a safe environment by using AI moderation to censor inappropriate content. Users can enable or disable mature content filters to some extent, but core safety measures remain in place to prevent harmful interactions.

OpenAI’s ChatGPT: OpenAI’s models are designed with built-in content moderation to prevent the generation of harmful, biased, or explicit content. The moderation policies are integral to the AI models and are continually updated to enhance safety and user experience.

Disabling Censorship

Most Character AI platforms, including Character.AI, do not allow users to completely disable censorship, for several reasons:

- Safety and Compliance: Ensuring user safety and adhering to legal standards are top priorities. Disabling censorship could expose users to harmful content and lead to legal issues.

- Ethical Considerations: Platforms aim to promote ethical use of AI. Allowing unrestricted content could enable misuse, such as spreading misinformation, harassment, or illegal activities.

- Quality Control: Maintaining high-quality interactions is crucial. Censorship helps prevent the degradation of user experience by filtering low-quality or inappropriate content.

Potential Risks

Disabling censorship, where possible, comes with significant risks:

- Exposure to Harmful Content: Without censorship, users may encounter explicit language, hate speech, or other offensive material, leading to a negative experience.

- Legal and Ethical Issues: Allowing unrestricted content could result in violations of regional laws and ethical standards, potentially leading to legal actions against the platform and its users.

- User Safety: Disabling censorship increases the risk of cyberbullying, harassment, and other harmful interactions, compromising user safety.

Alternatives to Disabling Censorship

While fully disabling censorship on Character AI platforms may not be feasible or advisable due to safety and compliance reasons, there are alternative methods to achieve a more personalized and less restrictive interaction experience. Here are some viable alternatives:

Custom Characters and Scenarios

One of the most effective ways to tailor your interactions without disabling core censorship features is by creating custom characters and scenarios. Many Character AI platforms, including Character.AI, offer tools to design characters and set specific interaction parameters.

1. Character Customization:

◦ Character.AI: Allows users to create and customize characters by defining their personality traits, backstory, and dialogue style. By doing so, users can guide the AI to respond in ways that align with their preferences while still adhering to the platform's content guidelines.

◦ Replika: Offers customization options where users can adjust their AI companion's personality traits and conversation topics to create a more personalized experience.

2. Scenario Creation:

◦ Users can set specific scenarios or contexts for interactions, which helps to steer the conversation in a desired direction. This method ensures that the AI operates within a framework that meets user expectations while respecting the platform's censorship policies.
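As a rough illustration of character customization and scenario creation, the Python sketch below models a character definition as structured data and combines it with a scenario into a single block of context text. The names `CharacterProfile` and `build_context` and the field layout are assumptions made for this example; actual platforms collect these details through their own character-creation forms, and their internal formats are not documented here.

```python
from dataclasses import dataclass, field


@dataclass
class CharacterProfile:
    # Fields mirror the attributes mentioned above: traits, backstory, dialogue style.
    name: str
    traits: list = field(default_factory=list)
    backstory: str = ""
    dialogue_style: str = ""


def build_context(profile: CharacterProfile, scenario: str) -> str:
    """Combine a character profile and a scenario into one context description."""
    lines = [f"Name: {profile.name}"]
    if profile.traits:
        lines.append("Traits: " + ", ".join(profile.traits))
    if profile.backstory:
        lines.append(f"Backstory: {profile.backstory}")
    if profile.dialogue_style:
        lines.append(f"Dialogue style: {profile.dialogue_style}")
    lines.append(f"Scenario: {scenario}")
    return "\n".join(lines)


# Example usage with invented values.
mentor = CharacterProfile(
    name="Captain Mara",
    traits=["patient", "witty"],
    backstory="A retired starship captain who now teaches navigation.",
    dialogue_style="Short, encouraging sentences.",
)
context = build_context(mentor, "The user is a cadet preparing for a first solo flight.")
```

Writing the character and scenario down explicitly like this makes it easier to see what guidance the AI is being given. It shapes tone and subject matter within the platform's content guidelines and does not change which content the platform filters.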

Third-Party Tools

Using third-party tools to modify or bypass censorship settings is not recommended because of the safety and legal risks. Some users explore these options anyway; anyone considering them should weigh the following implications:

1. Potential Risks:

◦ Security Concerns: Third-party tools might expose users to security vulnerabilities, including data breaches and malware.

◦ Legal Issues: Bypassing platform restrictions could lead to violations of terms of service, resulting in account suspension or legal action.

◦ Quality Control: Third-party modifications can degrade the quality of interactions and lead to unintended consequences.

2. Safe Alternatives:

◦ Instead of using unauthorized tools, users can look for official plugins or extensions provided by the platform that offer additional customization options while maintaining safety standards.

Feedback to Developers

Providing constructive feedback to platform developers is a proactive way to advocate for more flexible censorship settings. Engaging with the development community can lead to improvements in user experience:

1. User Surveys:

◦ Participate in user surveys or beta testing programs offered by the platform. This allows users to share their experiences and suggest enhancements directly to the developers.

◦ Character.AI Community: Engage with the Character.AI Community to discuss desired features and provide feedback.

2. Feature Requests:

◦ Submit feature requests through official channels, such as the platform's support page or forums. Articulate the need for more customizable content filters and provide specific examples of how these changes could improve the user experience.

Case Studies and User Experiences

Exploring case studies and user experiences can provide valuable insights into how users navigate censorship settings on Character AI platforms and how they manage to achieve their desired interactions within the given constraints. Here are a few examples:

Case Study 1: Customizing Characters on Character.AI

User Profile:

- Name: Emily

- Purpose: Creative storytelling and role-playing

- Platform: Character.AI

Experience: Emily enjoys using Character.AI for creative storytelling and immersive role-playing games. Initially, she found the platform's censorship features restrictive when discussing certain fantasy scenarios. However, by customizing her characters and scenarios, she managed to align the interactions with her creative needs while adhering to platform guidelines.

Steps Taken:

- Character Customization: Emily used Character.AI's tools to create custom characters with specific traits and backgrounds, guiding the AI to respond in ways that fit her storytelling.

- Scenario Setting: She set detailed scenarios, including context and goals for each interaction, which helped steer conversations in a desired direction without triggering censorship filters.

- Feedback and Improvement: Emily provided feedback to Character.AI about her experience, suggesting enhancements to content filters that could support more diverse creative expressions.

Outcome: By customizing her characters and scenarios, Emily successfully engaged in meaningful storytelling while respecting the platform's content moderation policies. Her feedback also contributed to potential improvements in the platform's customization features.

Case Study 2: Adjusting Content Filters on Replika

User Profile:

- Name: John

- Purpose: Emotional support and companionship

- Platform: Replika

Experience: John uses Replika for emotional support and companionship. He initially struggled with the platform's censorship of mature content, which he felt limited the depth of conversations. By adjusting Replika's content filters, John found a balance that allowed for more open interactions while maintaining a safe environment.

Steps Taken:

- Enable Mature Content: John enabled mature content in Replika's settings (that is, he turned off the mature content filter), allowing for more nuanced conversations that still adhered to the platform's safety standards.

- Personalization: He personalized his AI companion's personality traits to match his preferences, ensuring that interactions felt genuine and supportive.

- Ongoing Feedback: John regularly provided feedback to Replika's support team, sharing his experiences and suggesting ways to improve content moderation settings.

Outcome: John achieved more meaningful and supportive interactions with his AI companion by adjusting the content filters and personalizing the AI's traits. His ongoing feedback helped Replika understand user needs better and refine their content moderation policies.

User Testimonials and Community Insights

Forum Discussions:

- Character.AI Community: Users often discuss their experiences and share tips on customizing interactions within the community forums. For instance, users recommend setting specific keywords and phrases to guide conversations effectively while adhering to censorship policies (Character Support).

- Reddit Threads: On Reddit, users of Character.AI and other platforms share their strategies for navigating content filters and achieving desired interactions. Common suggestions include detailed scenario creation and providing consistent feedback to developers.

Success Stories:

- Creative Writing Groups: Writers using Character.AI for brainstorming and character development have shared success stories about how they customize their AI interactions to generate unique and creative content while respecting platform guidelines.

- Educational Uses: Teachers using Character AI for tutoring report positive experiences when setting specific educational scenarios and customizing AI responses to fit curriculum needs.

Conclusion

Navigating the intricacies of censorship on Character AI platforms can be challenging, but understanding the policies, exploring alternative methods, and leveraging community insights can significantly enhance your user experience. Here’s a summary of the key points discussed:

Summary of Key Points

1. Understanding Censorship:

◦ Censorship is implemented to ensure safe, respectful, and appropriate interactions.

◦ Common censored content includes explicit language, hate speech, and sensitive topics.

2. Platform Settings:

◦ Users can adjust certain settings to customize their interactions.

◦ Core safety measures, however, are non-negotiable to protect all users.

3. Official Stance:

◦ Major platforms like Character.AI do not support disabling censorship entirely.

◦ The focus remains on user safety, ethical AI use, and legal compliance.

4. Alternatives to Disabling Censorship:

◦ Customizing characters and scenarios.

◦ Providing feedback to developers to advocate for more flexible settings.

◦ Being cautious with third-party tools due to potential risks.

5. Case Studies and User Experiences:

◦ Real-world examples highlight how users successfully navigate censorship settings.

◦ Community insights and user feedback are valuable for improving platform policies.

Emphasis on Adhering to Policies

While the desire for more open interactions is understandable, adhering to platform policies ensures a safe and respectful environment for all users. Understanding and respecting these guidelines is crucial for maintaining the integrity and positive experience of Character AI platforms.

Encouragement to Explore Alternative Methods

Users are encouraged to explore the available customization options within the boundaries of platform policies. Creating custom characters, setting detailed scenarios, and actively engaging with the community can help achieve more personalized and satisfying interactions.
